We introduced Code Review AI to help teams iterate rapidly and solve problems from day one. Developers should spend their time building features, not debugging existing code!

To improve your developer experience, we have made our Review AI conversational.

Now, when our Review AI bot provides your team with feedback, you can ask for clarification and deeper explanations if anything in that feedback is unclear.

What benefit does this provide?

Our goal is to introduce features that help your team reduce friction throughout your development workflow and improve the overall effectiveness of your software delivery process. The types of errors checked by our Review AI can vary greatly in size, but the long-term impact of any given error in your codebase is often disproportionate to its character count.

In the case of smaller errors (probable typos, using the incorrect variable, etc.), the impact can be significant and difficult to debug, mostly because these types of errors are prone to slipping through the cracks unnoticed. Of course, once a small error is pointed out, it’s usually easy to understand the nature of the mistake and decide how to remedy it.

When our Review AI surfaces larger potential issues inferred from the contents of your code patch—such as concerns about how the code deviates from the recommended best practices for your chosen framework or how inconsistent error handling might cause unexpected crashes—the issues don't always have an obvious path toward resolution.

Regardless of the type of error our Review AI surfaces for your team, we've made it easier to understand the context of the error and how to remediate it.

How does it work?

After you’ve connected your GitHub account to the CTO.ai platform, you can add the CTO.ai Review label to a Pull Request in one of your repositories to summon our Review AI Bot for a first-pass review of your changes.

Let’s say the bot responds to your patch with potential problems and areas for improvement, but you’re unsure how to apply the advice. What now? Just ask the bot to clarify its response!

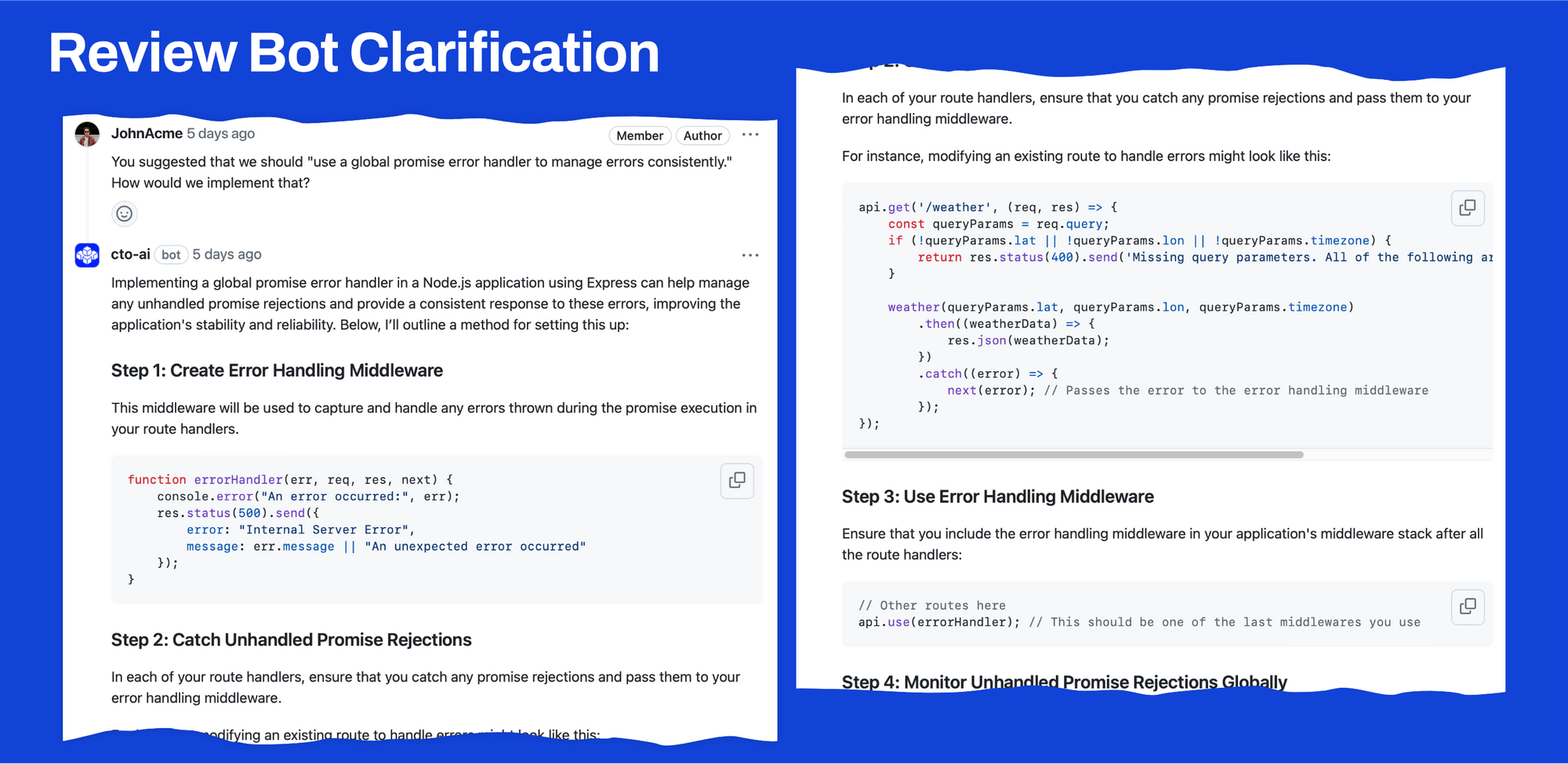

Consider, for example, one case in which our bot recommended that we "use a global promise error handler to manage errors consistently." The advice is specific enough to be useful, but not necessarily directly actionable.

With the Conversational Review feature of our Code Review AI, you can now ask the bot for clarification about its recommendations! Below is an example of the type of response the Review AI Bot might provide when asked for clarification:

As you can see in the example above, when asked for clarification about how we should implement a global promise error handler, the bot responds with a mini-tutorial for implementing such a handler in our specific context—in this case, an Express.js app. Included in this response is the code snippet for implementing the error handler and advice for how to integrate this pattern into the rest of the source code.
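The bot's actual response is not reproduced here. As a rough illustration of the pattern it describes, a global promise error handler in an Express.js app might look something like the sketch below. The names `asyncHandler` and `globalErrorHandler` are hypothetical, chosen for this example only, and the wiring at the bottom assumes an `app` created with `express()`.

```javascript
// Minimal sketch of a global promise error handler for Express.js.
// `asyncHandler` and `globalErrorHandler` are hypothetical names used
// for illustration; they are not part of Express or of CTO.ai.

// Wraps an async route handler so that a thrown error or rejected
// promise is forwarded to Express's error pipeline via next(err).
function asyncHandler(handler) {
  return function wrapped(req, res, next) {
    return Promise.resolve()
      .then(() => handler(req, res, next))
      .catch(next);
  };
}

// Error-handling middleware. Express identifies error handlers by
// their four-argument signature: (err, req, res, next).
function globalErrorHandler(err, req, res, next) {
  if (res.headersSent) {
    // A response has already started, so defer to Express's default handler.
    return next(err);
  }
  // Use the error's own status if it carries one; otherwise fall back to 500.
  const status = Number.isInteger(err.status) ? err.status : 500;
  res.status(status).json({ error: err.message || "Internal Server Error" });
}

// Wiring (assumes `const app = express()`; shown as comments only):
//   app.get("/users/:id", asyncHandler(async (req, res) => { /* ... */ }));
//   app.use(globalErrorHandler); // register after all routes
```

With this arrangement, every wrapped route reports failures through one code path, so error responses stay consistent. Newer Express releases (version 5) forward rejected promises from route handlers on their own, which may make the wrapper unnecessary there; the error-handling middleware still applies.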

What’s next?

If you’re using our Commands, Pipelines, and Services workflows to orchestrate your cloud application infrastructure, keep an eye on our blog, as we’ll soon be adding features that make it easier to find a path to resolution when things don’t go according to plan at other points in your team’s development lifecycle.

Want to see this feature in action? Book a feature demo with one of our experts today!
