Code Review AI Automates Second Opinions

We offer Code Review AI to help every team quickly get critical feedback that will help them identify small problems before they can have a larger impact.

This automation isn’t meant to replace peer review of code changes by other members of your team. Rather, it is intended to enhance your existing workflow, giving your team actionable feedback before anyone else even reviews the Pull Request.

Configuration

Before you can use our Code Review AI feature, you will need to connect your GitHub account to the CTO.ai platform.

Additionally, you will need to create a new label named CTO.ai Review in every repo where you wish to use our code review feature. A new label can be added from the sidebar of any Pull Request in the relevant repository, or by appending the /labels path to the end of your repository URL.
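
If you would rather script the label creation than click through the UI, GitHub's REST API has a "create a label" endpoint. The following is only a minimal sketch under assumptions: the helper name `buildCreateLabelRequest`, the color, and the description are illustrative choices, not something CTO.ai prescribes. Only the label name comes from the step above.

```javascript
// Sketch: build the request for GitHub's "create a label" REST endpoint.
// The helper name, color, and description are illustrative assumptions.
function buildCreateLabelRequest(owner, repo, token) {
  return {
    url: `https://api.github.com/repos/${encodeURIComponent(owner)}/${encodeURIComponent(repo)}/labels`,
    options: {
      method: "POST",
      headers: {
        Accept: "application/vnd.github+json",
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        name: "CTO.ai Review", // the label name required above
        color: "0e8a16", // hex color without the leading "#"; arbitrary
        description: "Request an automated code review",
      }),
    },
  };
}

// Possible usage (Node.js 18+ ships a global fetch):
//   const { url, options } =
//     buildCreateLabelRequest("my-org", "my-repo", process.env.GITHUB_TOKEN);
//   const res = await fetch(url, options);
```

Separating request construction from the network call keeps the sketch easy to inspect before you send anything to a real repository.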

Usage

To summon the CTO.ai Bot and trigger an automated code review, add the CTO.ai Review label to any Pull Request which you would like to have reviewed. That’s all it takes!

Once the appropriate label is added to a Pull Request, each change added to that PR will be reviewed for common errors by the Code Review AI Bot.
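
Adding the label can also be automated, for instance from a script that opens Pull Requests. The sketch below assumes you call GitHub's REST API directly; the helper name `buildAddReviewLabelRequest` is hypothetical. GitHub treats Pull Requests as issues for labeling purposes, which is why the path contains `/issues/`.

```javascript
// Sketch: build the request that adds the review label to a Pull Request.
// The helper name is a hypothetical, chosen for this example only.
function buildAddReviewLabelRequest(owner, repo, pullNumber, token) {
  if (!Number.isInteger(pullNumber) || pullNumber <= 0) {
    throw new TypeError("pullNumber must be a positive integer");
  }
  const base = "https://api.github.com/repos";
  const path = `${encodeURIComponent(owner)}/${encodeURIComponent(repo)}`;
  return {
    url: `${base}/${path}/issues/${pullNumber}/labels`,
    options: {
      method: "POST",
      headers: {
        Accept: "application/vnd.github+json",
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      // The endpoint accepts a list of label names to add.
      body: JSON.stringify({ labels: ["CTO.ai Review"] }),
    },
  };
}

// Possible usage (Node.js 18+ ships a global fetch):
//   const { url, options } =
//     buildAddReviewLabelRequest("my-org", "my-repo", 42, process.env.GITHUB_TOKEN);
//   await fetch(url, options);
```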

Example

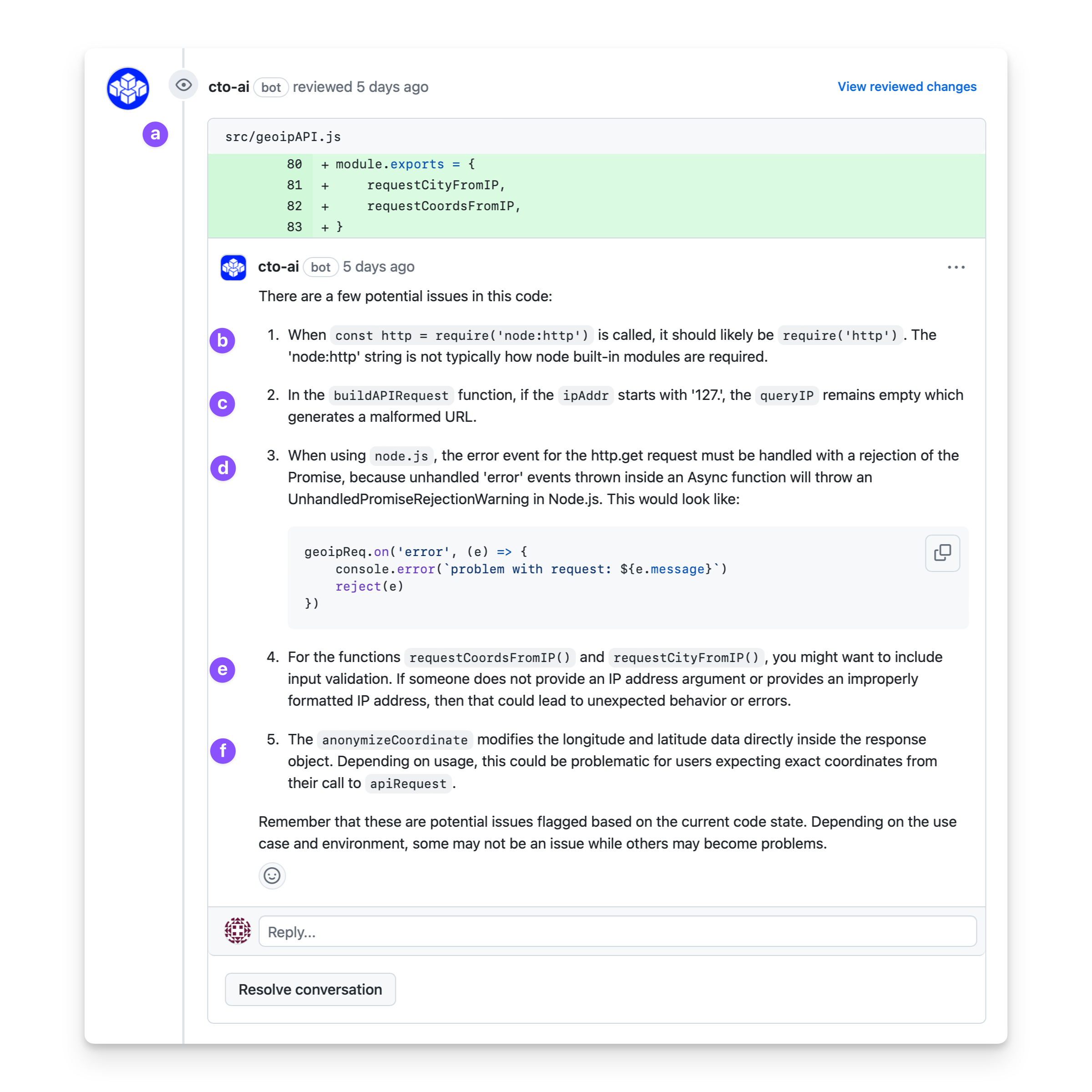

In this example, you can see how the automated code review picks up on the types of mistakes that might go unnoticed today but have the potential to cause major problems down the road.

Here’s what is happening in each marked element of the response:

- (a) When the automated code review is run, the CTO.ai Bot will post a comment with an analysis of the content of each change.

- (b) Some of the changes, like this one, may essentially be linter-style suggestions.

- (c) The automated code review correctly points out that if the IP address passed into the `buildAPIRequest` function starts with `127.`, it will append an empty string to the API query. In this case, that behavior is intentional, but it’s not obvious why from the code itself.

- (d) The next potential issue raised by the review bot is one that could actually cause problems: unhandled rejected promises in a Node.js app! Here, the correct solution is also provided as a suggestion.

- (e) Makes a suggestion about an issue that’s often overlooked: lack of data input validation. When you have a simple app with a simple function that is only ever called from another function with its own input validation, input validation may not be needed! But what about six months from now when that simple function is being called in a dozen different places?

- (f) Finally, we’re presented with a suggestion that’s an accurate assessment of the code’s behavior; it’s just not useful, because the intention is to anonymize coordinates!
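
To make points (c), (d), and (e) concrete, here is a hypothetical sketch of what addressing them might look like. It is not the code from the screenshot: the endpoint URL, the `lookup` helper, and the error handling strategy are all assumptions made for illustration. Only the `buildAPIRequest` name and the `127.` behavior come from the review described above.

```javascript
// Hypothetical stand-in for the reviewed code; the URL below is a placeholder.
const API_BASE = "https://geo.example.com/lookup";

function buildAPIRequest(ip) {
  // (e) Validate input even if today's only caller already does so.
  if (typeof ip !== "string" || ip.trim() === "") {
    throw new TypeError("ip must be a non-empty string");
  }
  // (c) Addresses starting with "127." intentionally produce an empty query.
  // A comment stating the reason belongs here so reviewers need not guess.
  const query = ip.startsWith("127.") ? "" : ip;
  return `${API_BASE}/${query}`;
}

// fetchImpl is injectable so the function can be exercised without a network.
async function lookup(ip, fetchImpl = globalThis.fetch) {
  try {
    const res = await fetchImpl(buildAPIRequest(ip));
    return await res.json();
  } catch (err) {
    // (d) Handle the rejection instead of leaving it unhandled.
    console.error(`lookup failed for ${ip}: ${err.message}`);
    return null;
  }
}
```

Returning `null` on failure is just one option; rethrowing a wrapped error or surfacing it to the caller may suit a real application better.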

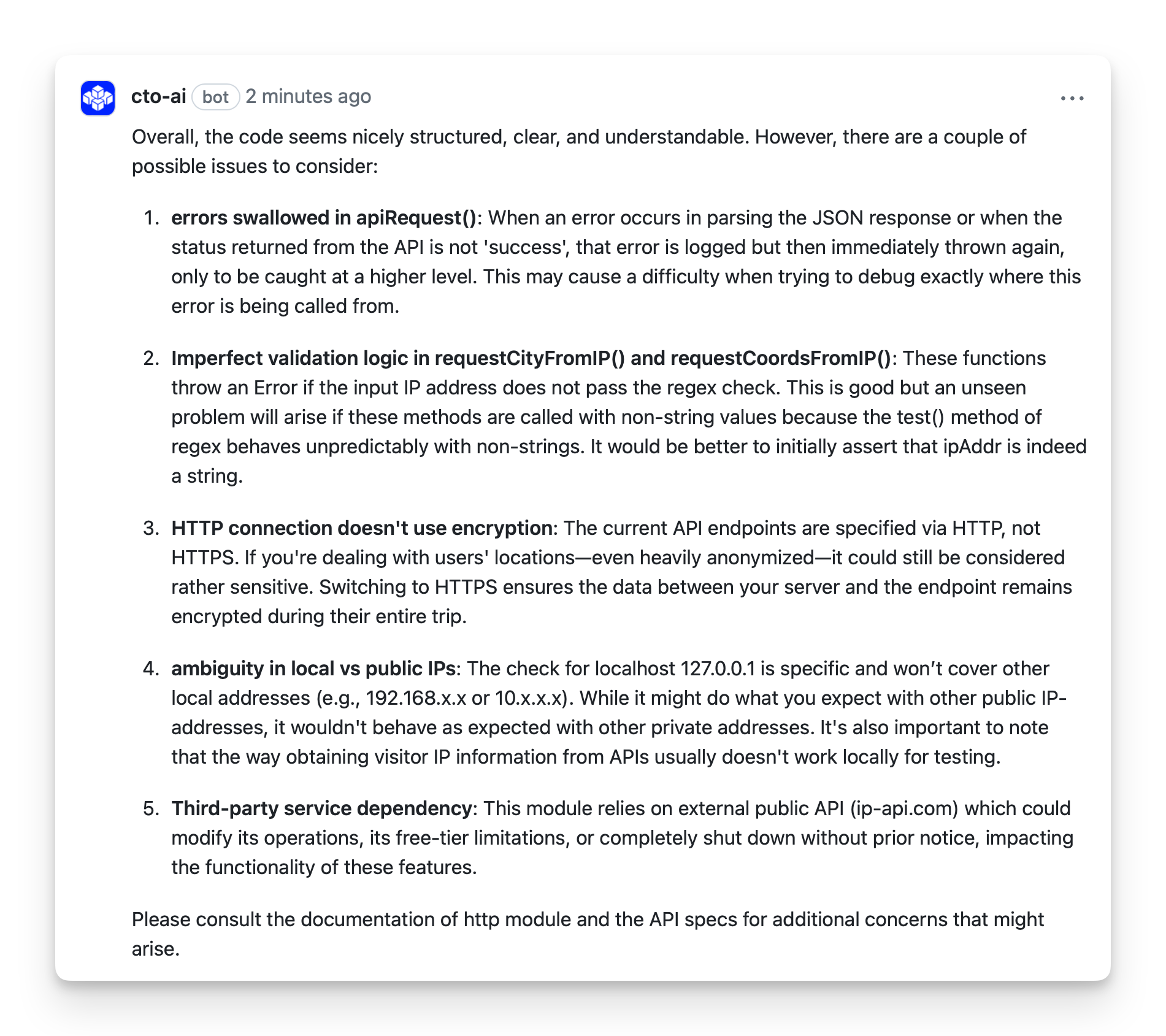

After making adjustments to the code based on the feedback from the automated code review, we can see that we have a much cleaner bill of health.

In the review of the revised code, the second point it raises is a valid consideration for implementing more effective validation logic. The other four points provided are all valid considerations that apply to any type of application that performs these types of operations. In other words, they’re useful considerations when you’re not writing an intentionally simplified sample application.