In the software development lifecycle (SDLC), improving your CI/CD process is key to streamlining the delivery of your applications to production. Engineering teams often get stuck choosing the best CI/CD practices, especially when it comes to maintaining test coverage across pipelines and configuring reusable builds with multiple contexts per workflow. Fast, reliable builds are no longer a nice-to-have: users and teams now expect them from any resilient CI/CD pipeline.

CTO.ai workflows allow you to maintain multiple pipeline jobs in a single code repository and customize which pipeline sections you want to test and validate. Configuring your CTO.ai workflows with Docker helps you build your code and test changes in any environment to catch bugs early in your application development lifecycle.

Prerequisites

The following are required to complete this tutorial:

- A CTO.ai account and the CTO.ai CLI installed

- Docker installed on your machine

- A GitHub account and a GitHub token

- A DigitalOcean account with a personal access token and a Spaces access key and secret access key

- A Terraform Cloud account and a Terraform API token
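Before you start, you can confirm that the command-line tools used in this post are on your PATH. The sketch below is a plain POSIX shell function; the name `missing_tools` is hypothetical, and it assumes the CTO.ai CLI is installed as the `ops` command (as in the npm install step later in this post).

```shell
#!/bin/sh
# Hypothetical helper: print each given command that is NOT found on PATH,
# one per line. An empty result means every tool is available.
missing_tools() {
  for tool in "$@"; do
    # command -v is the POSIX way to look up an executable or builtin
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# Usage (docker and ops are the CLIs this tutorial uses; npm installs ops):
# missing=$(missing_tools docker ops npm)
# [ -z "$missing" ] || { echo "please install: $missing"; exit 1; }
```

Printing one name per line keeps the output easy to pipe into other commands or to test for emptiness.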

In this post, I will walk you through how to build dynamic CI/CD pipelines using Docker and CTO.ai Workflows.

Here is a quick list of what we will accomplish in this post:

- Build a new `ops.yml` file for the project.

- Configure new jobs and workflows.

- Automate the execution of CDKTF to create DigitalOcean clusters and deploy the application.

Building Docker images on CTO.ai

Building Docker images on CTO.ai is simplified because CTO.ai supports Docker natively for its builds.

Dockerizing Workflows

Packaging a workflow in Docker is a powerful way to pin the exact dependencies and operating system it runs on. When you dockerize your workflow, you must also make sure your container images are correctly configured. CTO.ai lets you dockerize your app with a Dockerfile and configure the pipeline jobs in the ops.yml file.

Getting Started with Pipeline Configs Using CTO.ai Workflows

I will use the code in this repo as the example throughout this post. You can fork and clone the project to your local machine. After forking the repo, follow the steps below to get started.

Setting up CTO.ai Account and Secrets

1. Before setting up your CTO.ai account, create your secret keys on DigitalOcean and Terraform. When you are done, sign up or log in to your CTO.ai account.

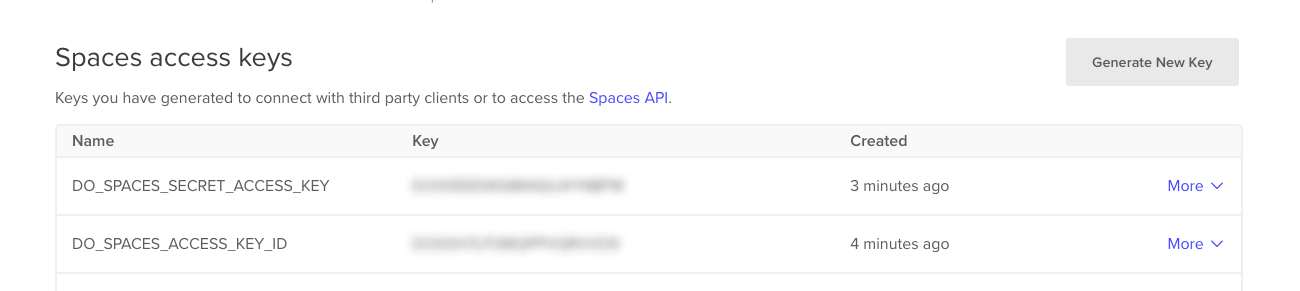

2. In your DigitalOcean dashboard, click API, select Tokens/Keys, and generate a new Spaces access key. Its key ID and secret are the values you will store as DO_SPACES_ACCESS_KEY_ID and DO_SPACES_SECRET_ACCESS_KEY.

- DO_TOKEN is created in the Personal access token section on DigitalOcean.

- The Terraform token (TFC_TOKEN) can be created from the API tokens page in your Terraform organization settings.

3. In your CTO.ai dashboard, click Settings, select Secrets, and add each secret, using its name (for example, DO_TOKEN) as the key and the token or access key as the value.

4. Install the ops CLI with `npm install -g @cto.ai/ops`.

The CTO.ai Ops.yml Config

The ops.yml file is where you define the CI/CD jobs, commands, and steps to be processed and executed. In this section, we will define our pipeline's steps, jobs, and workflows.

CTO.ai pipelines are workflows made up of processes that run automated tests and build different sequences of jobs. Setting up and deploying your infrastructure on CTO.ai, on the other hand, is done with commands. Below, you will see how we configured the commands for our environments in the repository.

Commands: each command defines what to run via the shell. Commands let you trigger interactive deployments, set up your environments, and deploy and destroy your infrastructure. A command is a collection of configuration and steps that dictate what happens in your resource environment. Let's look at the commands in the ops.yml file.

Setup - DigitalOcean Infrastructure

In this workflow, we are setting up the Kubernetes infrastructure on DigitalOcean with a single command directly from the Ops command line.

```yaml
version: "1"
commands:
  - name: setup-do-k8s-cdktf:0.1.0
    run: ./node_modules/.bin/ts-node /ops/src/setup.ts
    description: "Setup Kubernetes infrastructure on DigitalOcean"
    env:
      static:
        - STACK_TYPE=do-k8s-cdktf
        - STACK_ENTROPY=20220921
        - TFC_ORG=cto-ai
      secrets:
        - DO_TOKEN
        - DO_SPACES_ACCESS_KEY_ID
        - DO_SPACES_SECRET_ACCESS_KEY
        - TFC_TOKEN
      configs:
        - DEV_DO_K8S_CDKTF_STATE
        - STG_DO_K8S_CDKTF_STATE
        - PRD_DO_K8S_CDKTF_STATE
        - DO_DEV_K8S_CONFIG
        - DO_STG_K8S_CONFIG
        - DO_PRD_K8S_CONFIG
        - DO_DEV_REDIS_CONFIG
        - DO_DEV_POSTGRES_CONFIG
        - DO_DEV_MYSQL_CONFIG
```

The `version` key specifies which version of the ops.yml configuration format the file uses.

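- Run: the entry point the command executes. Here, ts-node runs the TypeScript setup script at /ops/src/setup.ts.

- Steps: a collection of executable commands that run sequentially during a job.

- Env: the `env` section defines the environment variables passed to the command; `static` values are set directly in the ops.yml file.

- Secrets & Configs: these are encrypted values, such as database passwords and production keys, that are stored in CTO.ai and passed to the command as environment variables. The ops.yml file lists only their names.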
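Because secrets and configs reach the command as environment variables, an entry script or pipeline step can fail fast when one of them is missing. Below is a minimal POSIX shell sketch, assuming the secret names from the setup command above; `require_env` is a hypothetical helper, not part of CTO.ai.

```shell
#!/bin/sh
# Hypothetical pre-flight helper: return non-zero (and report on stderr)
# if any of the named variables is unset or empty.
require_env() {
  missing=""
  for name in "$@"; do
    # Indirect lookup without the bash-only ${!name}; names must be valid
    # shell identifiers, such as the secret names listed in ops.yml.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing required variables:$missing" >&2
    return 1
  fi
  return 0
}

# Usage at the top of a script or step:
# require_env DO_TOKEN DO_SPACES_ACCESS_KEY_ID DO_SPACES_SECRET_ACCESS_KEY TFC_TOKEN || exit 1
```

Checking every name before returning means a single run reports all missing secrets at once instead of one per failed attempt.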

Command - Manage Vault

This command lets us manage our secrets vault and configs.

```yaml
# Another entry in the same commands: list of ops.yml
- name: vault-do-k8s-cdktf:0.1.0
  run: ./node_modules/.bin/ts-node /ops/src/vault.ts
  description: "manage secrets vault"
  env:
    static:
      - STACK_TYPE=do-k8s-cdktf
      - STACK_ENTROPY=20220921
    secrets:
      - DO_TOKEN
    configs:
      - DEV_DO_K8S_CDKTF_STATE
      - STG_DO_K8S_CDKTF_STATE
      - PRD_DO_K8S_CDKTF_STATE
```

You can define as many commands as you need. Each one pairs a `run` entry point with its own environment, secrets, and configs, so you can shape every command to fit your workflow.
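The three state configs used by both commands follow one naming pattern: the environment prefix (DEV, STG, PRD), then STACK_TYPE upper-cased with dashes turned into underscores, then `_STATE`. If a script needs the key for a given environment, a small helper can derive it. This is a hypothetical sketch inferred from the names in this ops.yml, not a documented CTO.ai convention.

```shell
#!/bin/sh
# Hypothetical helper: build the state config key for one environment,
# e.g. "dev" + "do-k8s-cdktf" -> DEV_DO_K8S_CDKTF_STATE.
state_config_name() {
  env_name=$1     # dev, stg or prd
  stack_type=$2   # the STACK_TYPE static value, e.g. do-k8s-cdktf
  printf '%s_%s_STATE\n' "$env_name" "$stack_type" \
    | tr '[:lower:]' '[:upper:]' \
    | sed 's/-/_/g'
}

# Usage:
# state_config_name stg "$STACK_TYPE"
```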

Configuring Pipelines using CTO.ai Workflows

```yaml
pipelines:
  - name: sample-app-pipeline:0.1.0
    description: build a release for deployment
    env:
      static:
        - DEBIAN_FRONTEND=noninteractive
        - STACK_TYPE=do-k8s
        - ORG=cto-ai
        - REPO=sample-app
        - REF=main
      secrets:
        - GITHUB_TOKEN
        - DO_TOKEN
    events:
      - "github:github_org/github_repo:pull_request.opened"
      - "github:github_org/github_repo:pull_request.reopened"
      - "github:github_org/github_repo:pull_request.synchronize"
    jobs:
      - name: sample-app-build-job
        description: example build step
        packages:
          - git
          - unzip
          - python
          - wget
          - tar
        bind:
          - /path/to/host/repo:/ops/application
        steps:
          - wget https://github.com/digitalocean/doctl/releases/download/v1.68.0/doctl-1.68.0-linux-amd64.tar.gz
          - tar xf ./doctl-1.68.0-linux-amd64.tar.gz
          - ./doctl version
          - git clone https://$GITHUB_TOKEN:x-oauth-basic@github.com/$ORG/$REPO
          - cd $REPO && ls -asl
          - git fetch && git checkout $REF
          - cd application && ls -asl
          - ../doctl auth init -t $DO_TOKEN
          - ../doctl registry login
          - CLEAN_REF=$(echo "${REF}" | sed 's/[^a-zA-Z0-9]/-/g' )
          - docker build -f Dockerfile -t registry.digitalocean.com/$ORG/$REPO-$STACK_TYPE:$REF .
          - docker push registry.digitalocean.com/$ORG/$REPO-$STACK_TYPE:$REF
```

In the pipeline config above, we set the pipeline's name and description and pass the GITHUB_TOKEN and DO_TOKEN secrets to it. The events section triggers the pipeline whenever a pull request is opened, reopened, or synchronized (updated with new commits).
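The event entries appear to follow a `provider:owner/repo:event.action` layout (inferred from the examples here, not from CTO.ai documentation), and `github_org/github_repo` are placeholders for your own organization and repository. This hypothetical POSIX shell helper splits a trigger string into those parts, which can help when double-checking what you typed.

```shell
#!/bin/sh
# Hypothetical helper: split "<provider>:<owner>/<repo>:<event>.<action>"
# into four space-separated fields.
parse_event() {
  trigger=$1
  provider=${trigger%%:*}   # text before the first ':'
  rest=${trigger#*:}        # text after the first ':'
  repo=${rest%%:*}          # owner/repo
  event=${rest#*:}          # e.g. pull_request.opened
  printf '%s %s %s %s\n' "$provider" "$repo" "${event%%.*}" "${event#*.}"
}

# Usage:
# parse_event "github:my-org/my-repo:pull_request.opened"
```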

- In the static environment section, you specify your stack type (STACK_TYPE), GitHub organization (ORG), repository name (REPO), and the branch to build (REF).

- In the jobs section, we specify the packages to install for the pipeline. A job is a fully automated set of steps that defines what to do with your application, and its `packages` list declares the tools to install in the job's environment. Any tool that isn't listed there must be installed manually inside the steps.

- In the steps section, we define the commands the pipeline runs, in order: installing the DigitalOcean CLI, cloning the GitHub repository, checking out the ref, and building and pushing the image.

- The `wget https://github.com/digitalocean/doctl/releases/download/v1.68.0/doctl-1.68.0-linux-amd64.tar.gz` step downloads version 1.68.0 of the `doctl` binary. To use a newer release, change the version in the URL.

- The `tar xf ./doctl-1.68.0-linux-amd64.tar.gz` step extracts the `doctl` binary into the working directory.

- The `./doctl version` step prints the installed `doctl` version, confirming the download worked.

- The `cd application && ls -asl` step moves into the application directory and lists its files.

- The `../doctl auth init -t $DO_TOKEN` step initializes `doctl` with the DO_TOKEN you created in step 2.

- The `../doctl registry login` step authenticates Docker with your DigitalOcean Container Registry so it can push and pull images.

- The `docker build -f Dockerfile -t registry.digitalocean.com/$ORG/$REPO-$STACK_TYPE:$REF .` step builds the Docker image from the Dockerfile and tags it using the ORG, REPO, STACK_TYPE, and REF values from the static env section.

- The `docker push registry.digitalocean.com/$ORG/$REPO-$STACK_TYPE:$REF` step pushes the image layers to your DigitalOcean Container Registry under the same ORG, REPO, STACK_TYPE, and REF values.
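The build steps compute a few values inline. This POSIX shell sketch reproduces them so you can check them locally: the doctl release URL for a given version, the CLEAN_REF sanitizing from the pipeline, and the resulting registry image reference. The function names are hypothetical and not part of ops.yml.

```shell
#!/bin/sh
# doctl release URL for a pinned version (linux/amd64), same layout as the wget step.
doctl_url() {
  v=$1
  printf 'https://github.com/digitalocean/doctl/releases/download/v%s/doctl-%s-linux-amd64.tar.gz\n' "$v" "$v"
}

# Same sanitizing as the CLEAN_REF step: every non-alphanumeric character becomes '-'.
clean_ref() {
  printf '%s\n' "$1" | sed 's/[^a-zA-Z0-9]/-/g'
}

# registry.digitalocean.com/<org>/<repo>-<stack_type>:<sanitized ref>
image_ref() {
  printf 'registry.digitalocean.com/%s/%s-%s:%s\n' "$1" "$2" "$3" "$(clean_ref "$4")"
}

# Usage:
# image_ref "$ORG" "$REPO" "$STACK_TYPE" "$REF"
```

The pipeline above tags images with `$REF`, which works for `main` but would fail for a branch such as `feature/login`, because Docker tags cannot contain `/`. Tagging with the sanitized value, as `image_ref` does here, avoids that. The sed expression is stricter than Docker requires (tags may also contain `.` and `_`), which keeps tags predictable.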

Executing Pipelines

We looked at how to build an ops.yml file for your pipeline configuration above. Now let’s execute and deploy our pipelines.

- Back in your terminal, run `ops run -b .`, which builds the Docker image from the Dockerfile and sets up your infrastructure on DigitalOcean.

- When prompted, enter the name of your environment (for example, `dev`), the name of your application repo, and the target branch.

- Your workflow will be deployed, and you can see the progress on your terminal.

- After setting up your DigitalOcean workflow, you can trigger your pipelines to automatically check for errors and run automated tests on your source code. Trigger your pipelines and build the service using `ops build .`.

- The workflow will build, compile the jobs, and run the pipeline workflow on your repository. In the CTO.ai pipeline dashboard, you will see your pipeline logs with a detailed overview of each step and how it was deployed on CTO.ai.

- For each step, you can see the packages installed in the job and how Docker builds the image, fetches the packages, and exports each layer before pushing the image to your DigitalOcean container registry.

Let us know what you think!

Congratulations! You have built a new ops.yml file that provisions infrastructure as code (IaC), runs CI/CD pipelines, and deploys your services using CTO.ai Workflows. This post explained some of the key elements of the ops.yml file and how they relate to the CTO.ai platform.

CTO.ai workflows give developers more flexibility to create customised CI/CD pipelines that execute their unique software development processes.

Here’s the repo with the source code, and if you'd like to take it and run with it, you can go ahead and deploy it yourself to CTO.ai:

Don't forget your environment variables! :)

Till next time!
