Code Review AI from CTO.ai is a new feature helping every development team rapidly get the feedback they need to stop small problems from snowballing into large ones—even teams that have yet to configure an effective test harness for their development process.

When it comes to elevating the quality of code produced by your entire team, one of the most important factors is the cadence of your code review cycle. Quicker reviews of new code lead to quicker development cycles and better code, which in turn drive a shorter lead time for changes, a higher deployment frequency, and more reliable deployments.

Code Review AI helps by checking your code for the types of errors that are most easily introduced into a codebase, freeing the rest of your team to focus their efforts on a higher-level analysis of the impact of the changes made to your code.

Of course, we're focused on building features that deliver immediate value to our users, and this is just the beginning of our AI roadmap. Our AI features are in an alpha stage for now, and we want your feedback on how AI can help you improve your developer experience! If you're interested in applying AI technologies to enhance your Developer Experience, we'd love to hear from you and discuss how we can help remove friction from your development workflow.

Code reviews are an often-overlooked aspect of software development within a smaller team/company. That is why CTO.ai’s new Code Review AI feature is so valuable. It can quickly provide that syntax-level linting and code logic review to help root out any overlooked issues that can quickly derail a deployment; all so I can focus on the larger features’ viability and polish during my review. Since each review’s scope is limited to that of the pull-request, I know that my code is both private and secure. In my opinion, this is a great use of the ever-growing artificial intelligence and large-language model technology to both support and improve my team’s code quality.

Doug Niccum

Software Engineering Manager, Schier

Why

Your Developer Experience shapes how your team collaborates within its entire development lifecycle, and small improvements to DX can have a huge impact on your team’s effectiveness in the long run. To support the flexibility required for constructing your ideal Developer Experience—whatever that may look like!—we began with the primitives that form the basis of your workflow: Commands, Pipelines, and Services.

As we’ve built out our Developer Control Plane (DCP), our goal has been to help you build the Developer Experience (DX) that your team always wished it had, then help you measure its effectiveness—not to assess the productivity of individual developers, but to understand where friction enters your development process. That’s where Insights and Reports come in: these measures of software delivery effectiveness help you understand how your performance changes as your development lifecycle evolves.

Now, we're adding intelligent features to augment and accelerate your workflow! And just like our other features, you only need to use the ones that actually enhance your workflow—anyone can make use of Code Review AI on its own, without needing to build and measure their infrastructure through the CTO.ai platform.

How

Anyone is welcome to try out our Code Review AI feature today through our GitHub integration. After connecting your GitHub account to CTO.ai, you’re ready to test it.

Begin by creating a new Pull Request in a repo associated with the GitHub account or organization that was linked to CTO.ai. Then, create a label in the GitHub repository where your Pull Request was created; the label's text should be "CTO.ai Review".
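If you prefer to script this step, GitHub's REST API can also create the label (`POST /repos/{owner}/{repo}/labels`). The following is a minimal sketch using only Python's standard library. The helper names, the label color, and the `my-org`/`my-repo` values are hypothetical, and the token is assumed to be a GitHub token that is allowed to manage labels in the repository.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"
LABEL_NAME = "CTO.ai Review"  # the label text used to trigger the Code Review AI Bot


def build_create_label_request(owner: str, repo: str, token: str,
                               color: str = "5319e7") -> urllib.request.Request:
    """Build (without sending) a POST /repos/{owner}/{repo}/labels request.

    Hypothetical helper; `color` is a hex code without the leading '#',
    and the default value here is arbitrary.
    """
    url = f"{GITHUB_API}/repos/{owner}/{repo}/labels"
    body = json.dumps({"name": LABEL_NAME, "color": color}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def create_label(owner: str, repo: str, token: str) -> int:
    """Send the request and return the HTTP status (201 when the label is created).

    urllib raises HTTPError for error responses, e.g. if the label already exists.
    """
    req = build_create_label_request(owner, repo, token)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

For example, `create_label("my-org", "my-repo", os.environ["GITHUB_TOKEN"])` would create the label once. Building the request separately from sending it keeps the network call in one place, and it lets you inspect the URL and payload before anything is sent.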

Finally, add the newly created CTO.ai Review label to your Pull Request to trigger a review from the Code Review AI Bot:

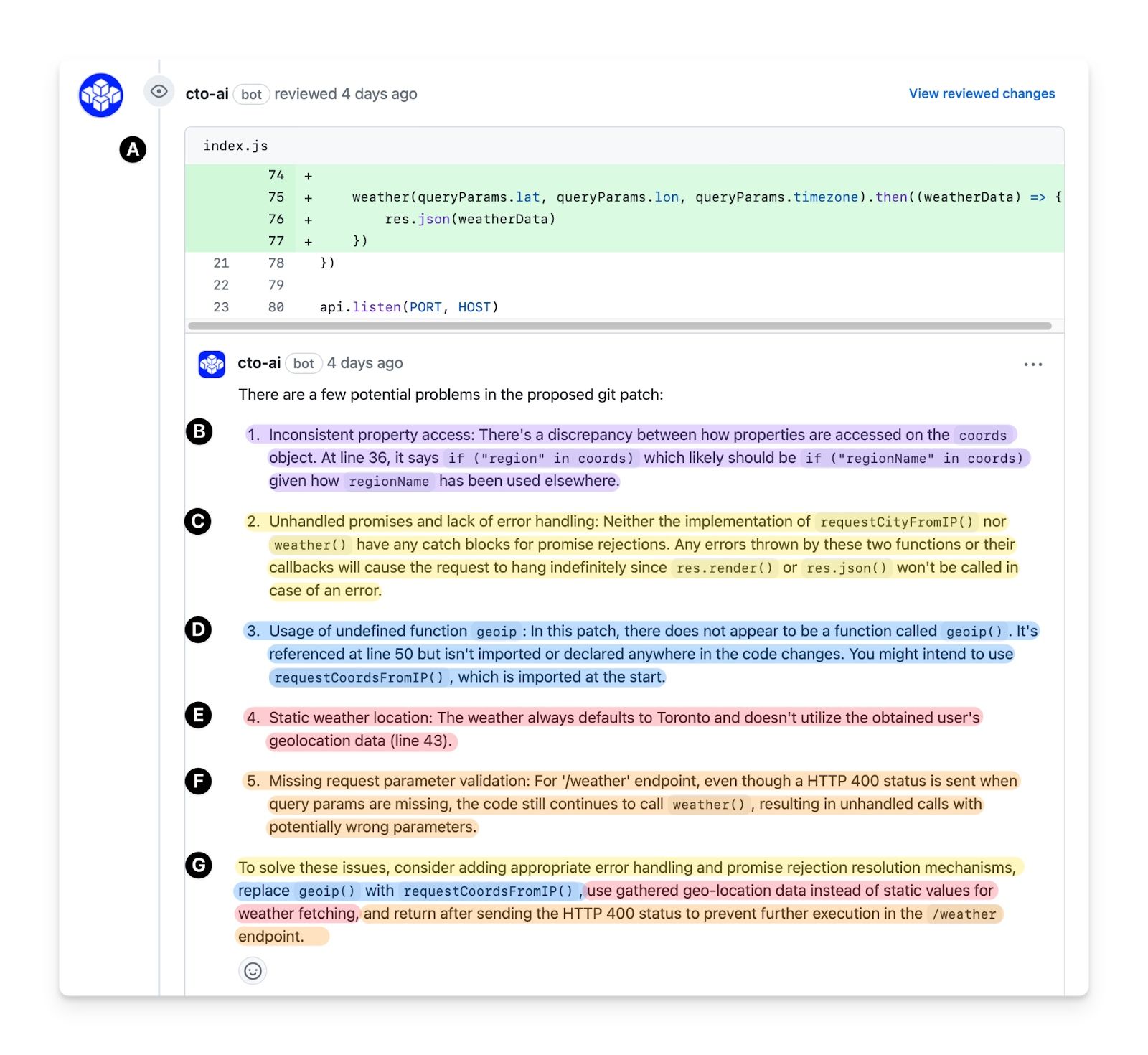
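You can also apply the label from a script. GitHub's REST API manages pull-request labels through its Issues endpoints (`POST /repos/{owner}/{repo}/issues/{issue_number}/labels`), so the PR number serves as the issue number. The following is a minimal standard-library sketch. The helper names and example values are hypothetical. It also assumes that the Code Review AI Bot reacts to a label added through the API the same way it reacts to one added in the GitHub web UI.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_add_label_request(owner: str, repo: str, pr_number: int, token: str,
                            label: str = "CTO.ai Review") -> urllib.request.Request:
    """Build (without sending) a request that adds `label` to a pull request.

    Hypothetical helper: pull-request labels are managed through the Issues
    endpoints, so the PR number is used as the issue number.
    """
    url = f"{GITHUB_API}/repos/{owner}/{repo}/issues/{pr_number}/labels"
    body = json.dumps({"labels": [label]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def add_review_label(owner: str, repo: str, pr_number: int, token: str) -> list:
    """Send the request and return the names of all labels now on the PR."""
    req = build_add_label_request(owner, repo, pr_number, token)
    with urllib.request.urlopen(req, timeout=10) as resp:
        # The response body is a JSON array of label objects.
        return [item["name"] for item in json.load(resp)]
```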

Now, each change added to the Pull Request will be reviewed for errors by the Code Review AI Bot! You can see below how the automated review catches minor mistakes that could cause larger problems later on. Notably, these are the kinds of errors that are easy to make and even easier to overlook:

Let’s break down this response:

- (A) For each diff in your Pull Request, the CTO.ai Bot will post a comment analyzing its content.

- (B) A potential issue with inconsistent property names is raised; in this case, when the name used to access the property was changed, it wasn’t updated in all places.

- (C) A potential issue with the logic of the application code is raised, pointing out a condition that could cause a request to hang indefinitely.

- (D) The review points out that a certain function doesn’t exist, and correctly identifies which function was likely meant to be called at this line!

- (E) Debugging code that slips into a production environment is always a problem; in this case, the review bot flagged code that appeared to run with a fixed, hard-coded set of parameters.

- (F) A lack of input validation is also highlighted, as it has the potential to produce surprising results when that code is executed.

- (G) Finally, all of the potential problems are summarized in a way that provides further guidance for resolving the errors.
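The code from the review above isn't reproduced here. As a purely hypothetical illustration, the following Python sketch shows the kinds of problems described in (C) and (F), together with fixes a reviewer might suggest. The service URL and function names are invented for this example.

```python
import urllib.parse
import urllib.request

API_BASE = "https://api.example.com"  # hypothetical service


def build_user_url(user_id: str) -> str:
    """Validate user_id before using it in a URL (the kind of issue in F)."""
    if not user_id or not (user_id.isascii() and user_id.isdigit()):
        raise ValueError(f"user_id must be a non-empty string of ASCII digits, got {user_id!r}")
    return f"{API_BASE}/users/{urllib.parse.quote(user_id)}"


def fetch_user(user_id: str, timeout_s: float = 5.0) -> bytes:
    """Fetch a user record (the kind of issue in C).

    Without a timeout (and with no global default socket timeout set),
    urlopen can block indefinitely if the server never responds; passing
    one bounds how long each blocking socket operation may wait.
    """
    with urllib.request.urlopen(build_user_url(user_id), timeout=timeout_s) as resp:
        return resp.read()
```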

It’s as simple as that. This automation feature isn’t meant to replace peer review of code changes, provide you with advice about application architecture, or actively help you write code!

Instead, Code Review AI helps every development team quickly get actionable first-pass feedback that can be used to fix subtle problems before they ever make it to production—even when a team isn’t able to configure linting and test harnesses as part of their development workflow from day one.

What's coming next?

This is just the starting line for our roadmap. We don't want to tack on useless, gimmicky features; we want tools that help your team augment and streamline your Developer Experience.

Down the road, we want to help you train and deploy private models that meet your needs and keep your data in your own hands. If your team wants to apply these LLMs to its own data, our platform can help you train your own models on that data for a more contextual, in-depth review process.

We believe AI tooling should support and enhance your development lifecycle, and our primary goal is always to help you remove friction from your Developer Experience. But general-purpose tooling can only get you so far!

And if you don't want to send any of your code to a third-party company, we get it—let's discuss how we can help you deploy your own models to enhance your team’s Developer Experience. For the specific needs of your team, we'll work with you to implement a fine-tuned model that keeps your data in your own hands.
