In this tutorial, I will explain how to scale your pods in Kubernetes. If your application is stateless, you can scale it horizontally. Stateless means that your application doesn't keep any state of its own: it doesn't write any local files and doesn't keep any local session data.

If you have two pods and one of them writes something locally, the pods will be out of sync, because each pod now has its own state. This also means you cannot simply kill and replace such a pod, because its local state would be lost with it.

Traditional databases such as MySQL and Postgres are stateful: their database files can't simply be split over multiple instances.

Most web applications can be made stateless:

- Session management needs to be done outside the container. If your web application receives visitors and you want to keep information about them, you need to use an external service; you cannot store this kind of data inside your container. You can use Memcached, Redis, or any database to store your sessions.

- Files that need to persist cannot be saved locally in your container, because they can be lost when the container is stopped and started again. Save them outside the container instead, either on shared storage or with an external service. On AWS, for example, you can use S3 (object storage).
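To make the session rule concrete, here is a minimal Python sketch. It assumes a hypothetical `SessionStore` class standing in for an external service such as Redis, and two handler objects playing the role of two pods. Because neither "pod" keeps session data in its own memory, a visitor's session is visible no matter which pod serves the request. In a real cluster the store would be a network service, not an in-process dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class SessionStore:
    """Hypothetical stand-in for an external session store such as Redis.

    In a real cluster this lives outside every pod; here it is a plain
    dictionary so the example runs on its own.
    """
    data: dict = field(default_factory=dict)

    def get(self, session_id: str) -> dict:
        return self.data.get(session_id, {})

    def set(self, session_id: str, session: dict) -> None:
        self.data[session_id] = session


@dataclass
class StatelessPod:
    """A web handler that keeps no session data of its own."""
    name: str
    store: SessionStore

    def handle_visit(self, session_id: str) -> int:
        # Read the session from the shared store, update it, write it back.
        session = self.store.get(session_id)
        session["visits"] = session.get("visits", 0) + 1
        self.store.set(session_id, session)
        return session["visits"]


shared = SessionStore()
pod_a = StatelessPod("pod-a", shared)
pod_b = StatelessPod("pod-b", shared)

print(pod_a.handle_visit("visitor-1"))  # 1
print(pod_b.handle_visit("visitor-1"))  # 2: pod-b sees the visit pod-a recorded
```

If `StatelessPod` instead kept a private dictionary, the second call would return 1 again, which is exactly the "out of sync" problem described earlier.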

Scaling in Kubernetes can be done using a Replication Controller, which ensures that a specified number of pod replicas are running at all times.

Pods created by a Replication Controller are automatically replaced if they fail, are deleted, or are terminated. If you tell Kubernetes to run five pods and only four are running (because a node crashed, for instance), Kubernetes will launch another instance of that pod on another node.

A Replication Controller is recommended even if you only want to make sure that a single pod keeps running, including after reboots: in that case, run the controller with just one replica.
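The replacement behaviour described above is a control loop: the controller keeps comparing the desired replica count with the pods that actually exist and creates or deletes pods to close the gap. The Python sketch below models that idea with a plain list. The `reconcile` function and the pod-naming scheme are hypothetical; the real controller works through the Kubernetes API server rather than mutating a list.

```python
import itertools

# Hypothetical counter used to give new pods unique name suffixes.
_suffixes = itertools.count(1)


def reconcile(desired: int, running: list[str],
              prefix: str = "expressapp-controller") -> list[str]:
    """Return the pod list after one reconciliation pass.

    Creates pods while fewer than `desired` exist and removes pods while
    more than `desired` exist.
    """
    pods = list(running)
    while len(pods) < desired:
        # Replace missing pods (crashed node, deleted pod, or scale-up).
        pods.append(f"{prefix}-{next(_suffixes)}")
    while len(pods) > desired:
        # Remove surplus pods on scale-down. Kubernetes decides which pod
        # the real controller removes; this sketch simply drops the last one.
        pods.pop()
    return pods


pods = reconcile(5, [])   # start five replicas
pods.pop(0)               # simulate a crash: only four pods remain
pods = reconcile(5, pods) # the next pass starts a replacement
print(len(pods))          # 5
```

The same pass handles failures and scaling alike, which is why changing the replica count is enough to scale: the controller simply reconciles toward the new number.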

To configure a Replication Controller for your application, you can use the Dockerized application we prepare below. If you already have your application image, feel free to jump to step 5.

Prerequisites

Before we get started with the tutorial, I will show you how to push your Docker image to Docker Hub. You can use the containerized application we created in our previous tutorial on Deploying a Docker Application to AWS ECS.

1. Clone the repository and build the Docker image.

2. Create your repository on Docker Hub. A repository name is always prefixed with your username, followed by a slash and the repository name. This is the repository your image will be pushed to.

- Here you can choose whether your repository is public or private.

3. Tag your image, identified by its image ID, with your repository name using the `docker tag <image_id> <username/repo_name>` command.

If you've forgotten your image ID or tag, you can look them up in your terminal with the `docker images` command.

4. When you are done tagging, push your image to the repository using the `docker push <username/repo_name>` command.

- The upload can take some time. If you check Docker Hub again, you will see your image listed as pushed a few seconds ago.

5. In this YAML file, the `kind` was changed to `ReplicationController`. The controller also has a `spec` in which we tell it that we want 2 replicas. The YAML file is attached in this gist for you to edit to your specification.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: expressapp-controller
spec:
  replicas: 2
  selector:
    app: express-app
  template:
    metadata:
      labels:
        app: express-app
    spec:
      containers:
      - name: k8s-demo
        image: tolatemitope/express-app
        ports:
        - name: nodejs-port
          containerPort: 5000
```

6. Create your Replication Controller using `kubectl create -f <replication.yaml_file>`.

7. Check the status of your pods using `kubectl get pods`. You can see that the controller created two pods, because we scaled horizontally to 2 replicas.

8. Describe one of the pods to see details such as its container ID and volume mounts using `kubectl describe pod expressapp-controller-cc2b2`. Your pod names will end in a different random suffix.

9. If one of those pods crashes, the controller automatically replaces it. To see this, delete one of the pods it created using `kubectl delete pod expressapp-controller-cc2b2`.

- You will see that the Replication Controller creates a new pod. The controller always makes sure that the correct number of pods is running.

10. You can scale your pods further using `kubectl scale --replicas=4 -f <replication_file>`.

11. Confirm that the controller created the number of pods you specified using `kubectl get pods`. As you can see, it has scaled to four pods.

12. You can get the name of your replication controller using `kubectl get rc`.

13. Another way to scale your controller is to refer to it by name: `kubectl scale --replicas=1 rc/expressapp-controller`.

14. Here, you can see that your controller terminated three pods and left only one.

15. You can delete your replication controller using `kubectl delete rc/expressapp-controller`.

- Now, the controller has been deleted and the last pod will be terminated.
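The controller in this tutorial knows which pods belong to it through the `selector` field of its manifest: it manages every pod whose labels match `app: express-app`, which is why the pod template carries the same label. The Python sketch below models that equality-based matching with plain dictionaries. The `matches` function and the sample pods are illustrative only and are not part of any Kubernetes client library.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair in the selector must
    appear in the pod's labels (extra labels on the pod are allowed)."""
    return all(labels.get(key) == value for key, value in selector.items())


selector = {"app": "express-app"}

# Hypothetical pods and their labels.
pods = {
    "expressapp-controller-a": {"app": "express-app"},
    "expressapp-controller-b": {"app": "express-app", "tier": "web"},
    "some-other-pod": {"app": "billing"},
}

# Only pods whose labels satisfy the selector count toward `replicas`.
owned = [name for name, labels in pods.items() if matches(selector, labels)]
print(owned)  # ['expressapp-controller-a', 'expressapp-controller-b']
```

Keeping the selector and the template labels identical is what lets the controller recognise the pods it creates as its own when it counts replicas.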

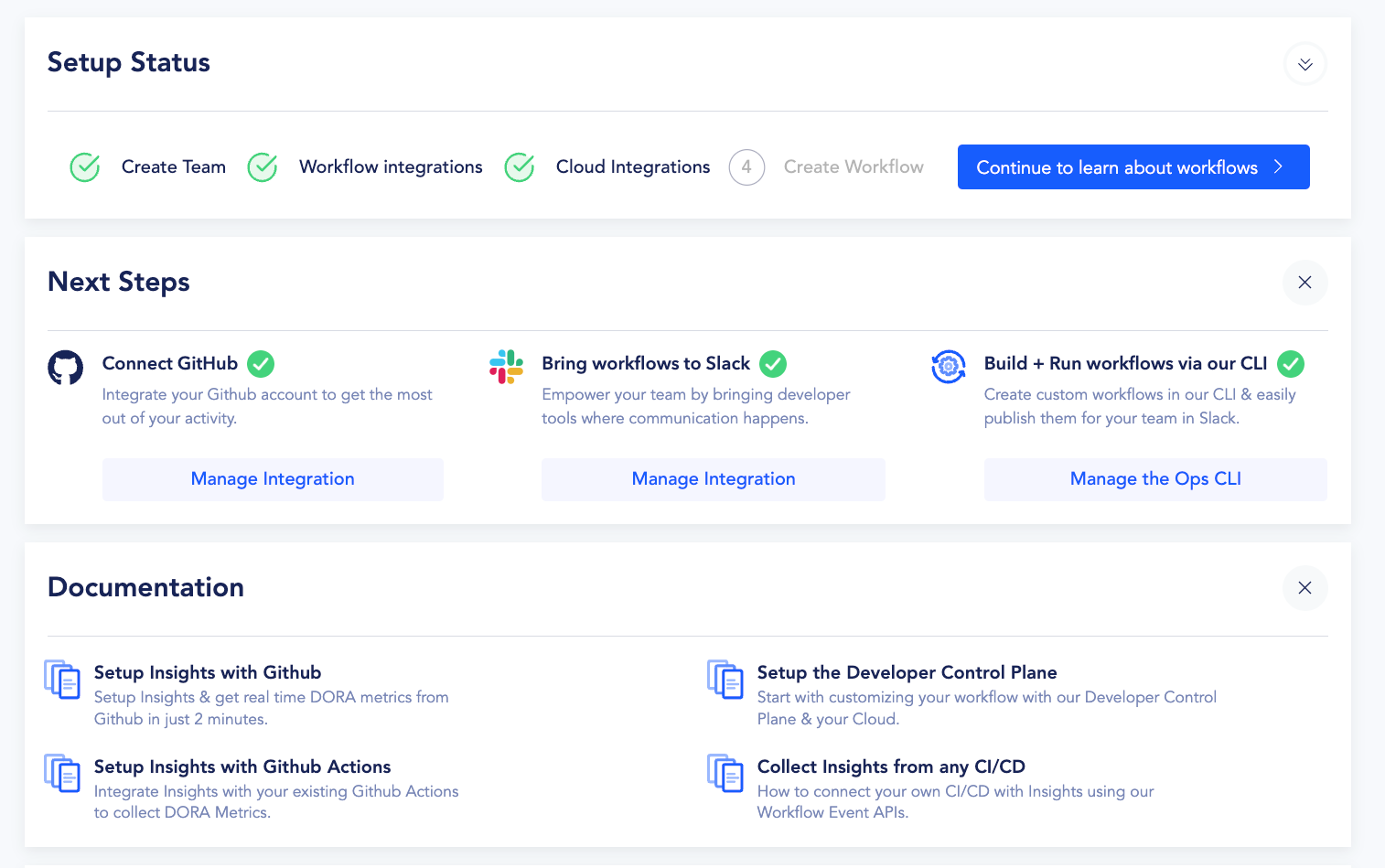

Looking for a Developer Control Plane for your Nodes and Replica Set?

Now that you’ve understood how to set up and scale your pods using Kubernetes Replication Controller, check out how you can leverage the CTO.ai developer control plane to deliver your Kubernetes replica set and Docker images with an intuitive PaaS-like CI/CD workflow that will enable even the most junior developer on your team to deliver on DevOps promises.
