In traditional networking, applications and services talk to each other over IP addresses. IP addresses can change, and when they do you might lose connections and incoming requests. It’s better to rely on DNS. DNS (Domain Name System) gives you a stable name that resolves to the current IP address. On top of that, a Kubernetes Ingress lets you simplify operations and save costs by sharing a single Application Load Balancer (ALB) across multiple applications and services in your Kubernetes cluster.

The AWS Load Balancer Controller manages AWS Elastic Load Balancers for your Kubernetes cluster. An Application Load Balancer works at the request level: it handles HTTP and HTTPS traffic and routes each connection based on information in the request. For example, with an Application Load Balancer you can set up path-based routing, where the path in the URL determines which group of instances receives the connection.
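To make path-based routing a bit more concrete, here is a toy sketch in shell. It only models the idea (rules are checked in order, the first matching path wins, everything else goes to a default) and does not talk to AWS. The function name and the target names (api-service, images-service, default-service) are made up for illustration.

```shell
#!/usr/bin/env bash
# Toy model of path-based routing: rules are checked top to bottom and the
# first matching path pattern wins; anything else falls through to a default.
# All target names here are hypothetical.
route_for_path() {
  case "$1" in
    /api|/api/*)       echo "api-service" ;;
    /images|/images/*) echo "images-service" ;;
    *)                 echo "default-service" ;;
  esac
}

route_for_path /api/users        # prints api-service
route_for_path /images/logo.png  # prints images-service
route_for_path /about            # prints default-service
```

A real ALB stores these rules on its listener; the Ingress resource later in this post is what generates them.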

Before you can get started with this tutorial, create your Kubernetes Cluster by following this blog. If you have a cluster running already, you can jump to step 1.

Prerequisites

The following are required to complete this tutorial:

- kubectl, eksctl, and the AWS CLI installed on your local machine

- Basic knowledge of Kubernetes deployments and Services

- A Kubernetes IDE such as Lens

In this post, I will walk you through how to configure and deploy an Application Load Balancer on AWS Elastic Kubernetes Service (EKS).

Ingress Fundamentals

Kubernetes makes it easy to deploy and scale your applications. An application runs as one or more pods, and to send traffic to those pods from inside the cluster you configure a ClusterIP Service. To let external traffic reach your pods, you configure a NodePort Service, which exposes a port on every node. Using AWS Route 53, you can then map your domain to a load balancer in the cloud, which balances incoming traffic across multiple targets. Route 53 resolves the domain to the Application Load Balancer, and the ALB forwards each request, according to the Ingress rules, to the Service for the matching host.

All of this routing is defined in the ingress.yaml file. When you apply it, the ingress controller creates an Application Load Balancer and configures its listeners and rules, which route traffic to your pods depending on the domain host.

Steps

Enable Service Account IAM Provider

After creating your cluster, you need to associate an IAM OIDC identity provider with it. Kubernetes service accounts provide an identity for the processes that run in your pods, and the OIDC provider lets you map a service account to an AWS Identity and Access Management (IAM) role to grant it access. In the command below, specify the name of your cluster and the AWS region where it is located.

eksctl utils associate-iam-oidc-provider --cluster=ingress --region=eu-central-1 --approve
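If you want to double-check that the provider was registered, something like the sketch below should work. It assumes the AWS CLI is installed and configured. The helper names (oidc_id_from_issuer, cluster_has_oidc_provider) are my own, not part of any tool.

```shell
#!/usr/bin/env bash
# Extract the provider ID (the last path segment) from an OIDC issuer URL.
oidc_id_from_issuer() {
  printf '%s\n' "${1##*/}"
}

# Succeeds if an IAM OIDC provider exists for the cluster's issuer.
# Needs the AWS CLI and valid credentials; it is defined here but not run.
cluster_has_oidc_provider() {
  local cluster="$1" region="$2" issuer
  issuer=$(aws eks describe-cluster --name "$cluster" --region "$region" \
    --query "cluster.identity.oidc.issuer" --output text) || return 1
  aws iam list-open-id-connect-providers --output text \
    | grep -q "$(oidc_id_from_issuer "$issuer")"
}

# Example, using the cluster name and region from this tutorial:
# cluster_has_oidc_provider ingress eu-central-1 && echo "OIDC provider found"
```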

Create Cluster Role

The command below creates the Role-based Access Control (RBAC) cluster role and binding that your service account will use.

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.1.4/docs/examples/rbac-role.yaml

The cluster role and binding will be created, and you will be able to see the resources, API groups, and verbs that are allowed.

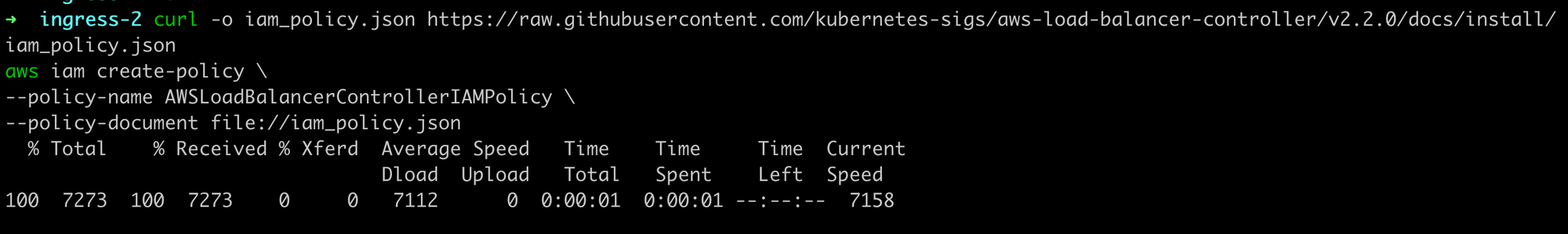

Create AWS IAM Policy

The AWS IAM policy gives the controller permission to create and manage the ALB on your behalf. It defines which actions are allowed on the load balancer resources, and it is attached to the controller’s service account in the next step. Download the policy document and create the policy:

curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.2.0/docs/install/iam_policy.json

aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy \
  --policy-document file://iam_policy.json
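The policy you just created is referenced later by its ARN, which embeds your AWS account number. Here is a small sketch for building that ARN instead of typing it by hand. The helper names (policy_arn, current_account_id) are hypothetical, and 111122223333 is just a placeholder account number.

```shell
#!/usr/bin/env bash
# Build the ARN of a customer-managed IAM policy from account ID and name.
policy_arn() {
  printf 'arn:aws:iam::%s:policy/%s\n' "$1" "$2"
}

# Look up the account ID of the current credentials (requires the AWS CLI).
# Defined here but not run automatically.
current_account_id() {
  aws sts get-caller-identity --query Account --output text
}

# With real credentials you could run:
# policy_arn "$(current_account_id)" AWSLoadBalancerControllerIAMPolicy

policy_arn 111122223333 AWSLoadBalancerControllerIAMPolicy
# prints arn:aws:iam::111122223333:policy/AWSLoadBalancerControllerIAMPolicy
```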

Create Service Account

Create the service account for your cluster. The service account will be used with the cluster role and the IAM policy created above. In the command below, replace the cluster name, region, and AWS account number with your own details.

eksctl create iamserviceaccount \
  --cluster=ingress \
  --region=eu-central-1 \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --attach-policy-arn=arn:aws:iam::<aws_account_number>:policy/AWSLoadBalancerControllerIAMPolicy \
  --override-existing-serviceaccounts \
  --approve

Deploy Certification Manager

cert-manager, the Kubernetes certificate manager, adds certificates and certificate issuers as resource types in the Kubernetes cluster and simplifies the process of obtaining, renewing, and using those certificates with your services.

kubectl apply \
  --validate=false \
  -f https://github.com/jetstack/cert-manager/releases/download/v1.1.1/cert-manager.yaml

Install the Ingress Controller

The Ingress controller takes the complexity of routing Kubernetes traffic out of your applications and provides a bridge between your Kubernetes services and the outside world. With the Ingress controller, you can accept traffic from outside the Kubernetes platform and load balance it to your running pods. Once it’s deployed, it will also monitor your pods and automatically update the load-balancing rules when pods are added to or removed from a service.

Next, download the ingress controller manifest using the command below.

curl -Lo v2_4_4_full.yaml https://github.com/kubernetes-sigs/aws-load-balancer-controller/releases/download/v2.4.4/v2_4_4_full.yaml

On line 731 of the downloaded file, replace the placeholder cluster name with the name of your cluster. Here, the name of my cluster is ingress.
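If you would rather not hunt for the line number, the edit can be scripted. The sketch below assumes the placeholder in the manifest is the literal text your-cluster-name (check your copy first). The helper name set_cluster_name is my own.

```shell
#!/usr/bin/env bash
# Replace the placeholder cluster name in a downloaded controller manifest.
# Assumes the placeholder text is "your-cluster-name"; sed keeps a .bak copy
# of the original file next to it.
set_cluster_name() {
  local manifest="$1" cluster="$2"
  sed -i.bak -e "s|your-cluster-name|${cluster}|" "$manifest"
}

# Example, using the file and cluster name from this tutorial:
# set_cluster_name v2_4_4_full.yaml ingress
# grep -n -- "--cluster-name" v2_4_4_full.yaml
```

The grep afterwards is a quick way to confirm the change landed where you expected.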

- Next, apply and deploy the ingress controller using

kubectl apply -f v2_4_4_full.yaml

You will see all the role bindings, certificate manager, services, deployments, and ingress classes created.
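To confirm the controller actually came up, you can wait for its rollout to finish. This is a minimal sketch: I am assuming the Deployment is named aws-load-balancer-controller and lives in kube-system, matching the service account created earlier. The wrapper name wait_for_controller is hypothetical.

```shell
#!/usr/bin/env bash
# Wait until the controller Deployment has finished rolling out.
# The Deployment name and namespace are assumptions; adjust if yours differ.
# The optional first argument is a timeout (defaults to 120s).
wait_for_controller() {
  kubectl rollout status deployment/aws-load-balancer-controller \
    --namespace kube-system --timeout="${1:-120s}"
}

# Example:
# wait_for_controller 180s
```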

Deploy your Deployment and Service Configurations

You can now deploy your Deployment and Service configurations to your cluster. In this repo, I have a sample Nginx deployment file I’m working with. You can clone the repo and deploy the deploy.yaml file using kubectl apply -f deploy.yaml. This will create the Nginx deployment and Nginx Service in your cluster. In your Lens application, you can now see the deployment running:

- You will also see your Nginx Service running.
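If you don’t have the repo handy, here is a rough sketch of what such a file could look like. This is not my actual deploy.yaml, just a minimal stand-in. The Deployment name, labels, replica count, and image tag are assumptions, while the Service name nginx-service and port 80 match the Ingress used later in this post. The Service is a NodePort because the Ingress uses target-type instance, which sends traffic to node ports.

```shell
#!/usr/bin/env bash
# Write a minimal, hypothetical Deployment + NodePort Service to a file.
# Only the Service name and port are taken from the Ingress in this post.
write_sample_manifest() {
  cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:stable
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort        # target-type "instance" routes to node ports
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
EOF
}

# Example (writes to a separate file so it does not clobber deploy.yaml):
# write_sample_manifest sample-deploy.yaml && kubectl apply -f sample-deploy.yaml
```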

Deploy Ingress Configurations

Kubernetes Ingress is an object that allows you to access your Kubernetes services from outside the Kubernetes cluster. You can configure access by creating a collection of rules and paths that define which inbound connections reach which services and workloads. This lets you integrate and combine your routing rules into a single resource.

Next, write the Ingress configuration for your service and deployment. If you have a host, you can fill in the values for certificate-arn, listen-ports, ssl-redirect, and the host. You can also use the configuration in the GitHub repository directly.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: instance
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-west-2:<acct number>:certificate/<cert value>
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
    alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
spec:
  rules:
    - host:
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80

- Back in your AWS Load Balancer console, you will see your ALB created with the Name, ARN, DNS name, State, type and Scheme.

- Copy the DNS name and paste it into your browser; you’ll see your Nginx application served through the ALB.
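You can also grab the DNS name from the command line instead of the console. The sketch below reads it from the Ingress status. It assumes the Ingress is in the default namespace unless you pass another one, and the helper name ingress_hostname is my own. The value may be empty for a minute or two while the ALB is still being provisioned.

```shell
#!/usr/bin/env bash
# Print the load balancer DNS name recorded in an Ingress's status.
# $1 = Ingress name, $2 = namespace (optional, defaults to "default").
ingress_hostname() {
  kubectl get ingress "$1" --namespace "${2:-default}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Example, using the Ingress name from this tutorial:
# curl -I "http://$(ingress_hostname nginx-ingress)/"
```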

Your Kubernetes Application Load Balancer is done!

Whew! If you can do all that, you are off to the races to build and deploy your own Application Load Balancer for your workloads!

If you’d like to see my example, you can check out the source code on GitHub.

Good luck!
