Get Started with CTO.ai Workflows

Our Workflows—in the form of Commands, Pipelines, and Services—can be used to build a cloud application platform that meets your needs.

Workflow SDK Runtimes

Our SDKs provide a straightforward way to run automated processes, send Lifecycle Event data to your CTO.ai Dashboard, and integrate with other systems. We currently offer Workflow SDKs with support for four different runtimes: Python, Node, Bash, and Go.

Anatomy of a Workflow

There are three main components to workflows of every kind:

- The ops.yml configuration file
- A Dockerfile (and a corresponding .dockerignore file)
- Your custom code
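To make this anatomy concrete, here is a minimal Python sketch that checks whether a directory contains all three components. The function check_workflow_anatomy is hypothetical and is not part of the ops CLI or any CTO.ai SDK. It also assumes that any other top-level file in the directory counts as "custom code", which is a simplification.

```python
import tempfile
from pathlib import Path

# Configuration and Docker files every Workflow is expected to contain.
REQUIRED_FILES = ("ops.yml", "Dockerfile", ".dockerignore")


def check_workflow_anatomy(workflow_dir: str) -> list[str]:
    """Return the problems found in workflow_dir; an empty list means none.

    Hypothetical helper, not part of the ops CLI or the CTO.ai SDKs.
    """
    root = Path(workflow_dir)
    problems = [
        f"missing {name}" for name in REQUIRED_FILES if not (root / name).is_file()
    ]
    # Assumption: any other top-level file is treated as custom code.
    custom_code = [
        p for p in root.iterdir() if p.is_file() and p.name not in REQUIRED_FILES
    ]
    if not custom_code:
        problems.append("no custom code files found")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("ops.yml", "Dockerfile", "main.sh"):
            (Path(tmp) / name).write_text("")
        print(check_workflow_anatomy(tmp))  # ['missing .dockerignore']
```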

Our ops CLI provides the ops init subcommand to initialize a new Workflow and its corresponding scaffolding files.

Workflow Scaffolding

When you create a Workflow using the ops CLI, it also adds the basic files you might expect when building an application in the language you selected. Here is how all of these files work together, using a Bash-based Workflow as an example:

- The main.sh file contains minimal demo code that uses our SDK to prompt the user for input and send a Lifecycle Event to the CTO.ai platform.
- The included Dockerfile defines your automation’s dependencies and runtime environment.
- The ops.yml file configures how the Workflow is actually executed. Each Workflow defined in an ops.yml file specifies the container to build and the steps it should run.

Each of our templates includes the basic scaffolding you need to start building a Workflow in the language of your choice. The files you can expect for each language’s Workflow template are listed in the table below:

| Scaffold File | Bash | Python | JavaScript | Go |
|---|---|---|---|---|
| ops.yml | ✅ | ✅ | ✅ | ✅ |
| Dockerfile | ✅ | ✅ | ✅ | ✅ |
| .dockerignore | ✅ | ✅ | ✅ | ✅ |
| Main code file | main.sh | main.py | index.js | main.go |
| Dependency file | (none) | requirements.txt | package.json | go.mod |
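The table above can also be expressed as a simple lookup. The following Python sketch returns the files you would expect in a freshly initialized template for each runtime. TEMPLATE_FILES and expected_scaffold are illustrative names, not part of the ops CLI, and the sketch covers only the files listed in the table.

```python
# Files shared by every template, per the table above.
COMMON_FILES = ("ops.yml", "Dockerfile", ".dockerignore")

# Per-runtime main code file and dependency file ("javascript" is the Node runtime).
# Illustrative data structure, not part of the ops CLI.
TEMPLATE_FILES = {
    "bash": {"main": "main.sh", "deps": None},
    "python": {"main": "main.py", "deps": "requirements.txt"},
    "javascript": {"main": "index.js", "deps": "package.json"},
    "go": {"main": "main.go", "deps": "go.mod"},
}


def expected_scaffold(runtime: str) -> list[str]:
    """List the files a freshly initialized template for `runtime` should contain."""
    try:
        entry = TEMPLATE_FILES[runtime.lower()]
    except KeyError:
        raise ValueError(f"unknown runtime: {runtime!r}") from None
    # Build a new list so the shared COMMON_FILES tuple is never modified.
    files = [*COMMON_FILES, entry["main"]]
    if entry["deps"] is not None:
        files.append(entry["deps"])
    return files


if __name__ == "__main__":
    for runtime in TEMPLATE_FILES:
        print(f"{runtime}: {', '.join(expected_scaffold(runtime))}")
```

Keeping the common files separate from the per-runtime entries mirrors the table: the first three rows are identical across runtimes, and only the main code file and dependency file vary.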

Scaffolding for Pipelines and Jobs

When you initialize a Pipeline, only an ops.yml file will be created.

To generate scaffolding for your Pipeline Jobs, first define one or more Jobs in your ops.yml file, then run ops init . -j in the directory that contains that ops.yml file. A subdirectory is created in ./.ops/jobs/ for each Job, and each subdirectory is named after its Job.
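The directory layout described above can be sketched in Python as follows. The helpers job_scaffold_dirs and missing_job_dirs are hypothetical. Job names are passed in directly rather than parsed from ops.yml, because parsing would require a YAML library and the file's exact schema, which this section does not cover.

```python
import tempfile
from pathlib import Path


def job_scaffold_dirs(ops_yml_dir: str, job_names: list[str]) -> dict[str, Path]:
    """Map each Job name to the directory `ops init . -j` should create for it.

    Hypothetical helper: Job names are supplied by the caller, not read from ops.yml.
    """
    jobs_root = Path(ops_yml_dir) / ".ops" / "jobs"
    return {name: jobs_root / name for name in job_names}


def missing_job_dirs(ops_yml_dir: str, job_names: list[str]) -> list[str]:
    """Return the Jobs whose scaffolding directory does not exist yet."""
    return [
        name
        for name, path in job_scaffold_dirs(ops_yml_dir, job_names).items()
        if not path.is_dir()
    ]


if __name__ == "__main__":
    jobs = ["build", "deploy"]  # example Job names, not from a real Pipeline
    with tempfile.TemporaryDirectory() as tmp:
        # Simulate a state where only the "build" Job has been scaffolded.
        (Path(tmp) / ".ops" / "jobs" / "build").mkdir(parents=True)
        print("missing:", missing_job_dirs(tmp, jobs))  # missing: ['deploy']
```

A check like this could be run after ops init . -j to confirm that every Job you defined received its own subdirectory.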

Create a Workflow from a Template (ops init)

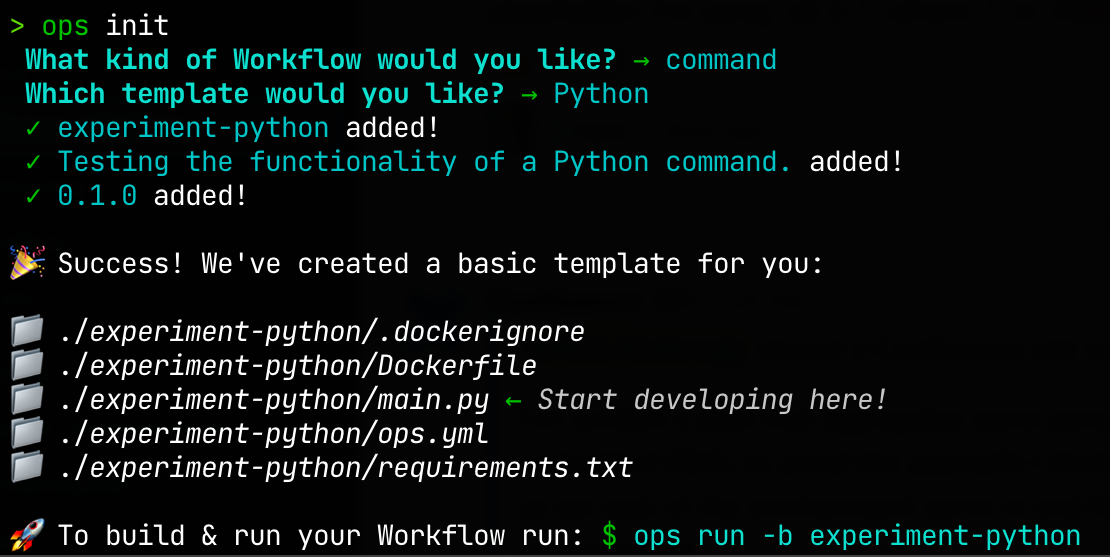

The CTO.ai ops CLI provides a subcommand to create scaffolding files for your workflow: ops init.

If you run ops init without any arguments, you will be interactively prompted for a name, the type of workflow you wish to create, a description, and a version number. This information will be used to generate scaffolding code—containing a basic template for your workflow—in a subdirectory of your current path: