Configuring Pipelines

CTO.ai Pipelines let you build automated workflows that are triggered by milestones in your development lifecycle or run on demand by a member of your team. These workflows can interact with other systems and with each other. For more complex development processes, Pipelines can be triggered by Commands or by other Pipelines, and they can deploy preview environments via Services, build and test deployment artifacts, or orchestrate infrastructure changes.

We currently offer Pipelines SDKs with support for Python, Node, Go, and Bash runtimes. Our SDKs provide a straightforward way to send Lifecycle Event data to your CTO.ai Dashboard, prompt users interactively, and integrate with other systems.

Structure of Pipelines Workflows

The CTO.ai ops CLI provides a subcommand to create scaffolding files for your workflow: ops init. You can use the -k flag to specify the kind as a pipeline workflow, as well as pass an optional argument to give the workflow a name (example-pipeline, in this case):

After you respond to the CLI’s interactive prompting, it will generate template code for your new Pipelines workflow.
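If you script your setup, the two ops init invocations described in this document can be assembled as argument lists and run from Python. This is a minimal sketch, assuming that pipeline is the value -k expects for a Pipelines workflow (confirm with the CLI's help output). The helper names init_workflow_argv, init_jobs_argv, and run are hypothetical, and -j is the jobs flag covered later in this document.

```python
import shlex
import subprocess
from typing import Optional

# Assumption: "pipeline" is the -k value for a Pipelines workflow.
PIPELINE_KIND = "pipeline"


def init_workflow_argv(name: Optional[str] = None) -> list[str]:
    """Build the argv for `ops init [name] -k pipeline`."""
    argv = ["ops", "init"]
    if name:
        argv.append(name)  # optional workflow name, e.g. example-pipeline
    argv += ["-k", PIPELINE_KIND]
    return argv


def init_jobs_argv(workflow_dir: str = ".") -> list[str]:
    """Build the argv for `ops init <dir> -j`, run where your ops.yml lives."""
    return ["ops", "init", workflow_dir, "-j"]


def run(argv: list[str]) -> int:
    """Run the ops CLI with the caller's terminal, so its interactive prompts still work."""
    return subprocess.run(argv, check=False).returncode


if __name__ == "__main__":
    # Print the commands instead of running them.
    print(shlex.join(init_workflow_argv("example-pipeline")))
    print(shlex.join(init_jobs_argv()))
```

Because run() does not redirect stdin or stdout, the CLI's interactive prompting still reaches your terminal.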

Unlike the scaffolding generated for Commands or Services workflows, the initial template for Pipelines contains only an ops.yml file. This is because each Job you define in your ops.yml configuration acts as an isolated, containerized workflow, the Jobs run in sequence, and each Job needs its own scaffolding code.
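Sequential execution can be pictured with a small model in which each Job is a callable that returns an exit code. This is an illustrative sketch, not how CTO.ai schedules Jobs: the callables stand in for containerized Jobs, run_in_sequence and JobResult are hypothetical names, and halting on the first non-zero exit code is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class JobResult:
    name: str
    exit_code: int


def run_in_sequence(jobs: list[tuple[str, Callable[[], int]]]) -> list[JobResult]:
    """Run Jobs one after another in the order listed.

    Each callable is a stand-in for one containerized Job and returns its exit code.
    Assumption: a non-zero exit code stops the pipeline, so later Jobs never start.
    """
    results: list[JobResult] = []
    for name, job in jobs:
        code = job()
        results.append(JobResult(name, code))
        if code != 0:
            break
    return results


if __name__ == "__main__":
    # Hypothetical Jobs: the build succeeds, the deploy fails.
    demo = [
        ("example-pipeline-build", lambda: 0),
        ("example-pipeline-deploy", lambda: 1),
    ]
    for result in run_in_sequence(demo):
        print(result.name, result.exit_code)
```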

In any ops.yml file, after you have defined one or more Pipelines Jobs (regardless of the type of template originally used), you can run ops init . -j in the directory containing your ops.yml file to generate scaffolding code for each Job. Separate template directories for each Job are created as subdirectories of .ops/jobs/.
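The mapping from Job names to scaffolding directories can be sketched as follows. The function name job_scaffold_dirs and the Job name example-pipeline-build are hypothetical, and the input is a plain list of names rather than a parsed ops.yml, so the sketch needs no YAML library.

```python
from pathlib import PurePosixPath


def job_scaffold_dirs(job_names: list[str]) -> list[PurePosixPath]:
    """Map each Job name to the .ops/jobs/<job-name>/ directory that holds its scaffolding.

    job_names stands in for the Job names read from your ops.yml (hypothetical input).
    """
    seen: set[str] = set()
    dirs: list[PurePosixPath] = []
    for name in job_names:
        # In this model, two Jobs with the same name would map to the same directory.
        if name in seen:
            raise ValueError(f"duplicate Job name: {name}")
        seen.add(name)
        dirs.append(PurePosixPath(".ops", "jobs", name))
    return dirs


if __name__ == "__main__":
    for directory in job_scaffold_dirs(["example-pipeline-build", "example-pipeline-deploy"]):
        print(directory)
```

Since each directory is named after its Job, the sketch rejects duplicate names rather than silently mapping two Jobs onto one directory.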

For a deeper explanation of the ops init subcommand and the scaffolding generated by our CLI, our Workflows Overview document has you covered.

Pipelines Workflow Reference

To help you better understand how Pipelines workflows are structured, we have included an explanation of the scaffolding template generated by our CLI below. Let’s have a look at what is generated when we create a new Pipelines workflow with our CLI.

Workflow Scaffolding Code

You might notice a major difference between this scaffolding and the scaffolding generated for Commands or Services workflows: the scaffolding for a Pipelines workflow includes only an ops.yml file. This is because a Pipelines workflow consists of multiple Jobs, each of which runs independently in its own container and needs its own scaffolding.

Creating Pipelines Jobs

To demonstrate how Jobs function in the context of a Pipelines workflow, we will use an ops.yml that differs a bit from what we have above:

Let’s take a look at what we’re specifying in the ops.yml file above. The Pipelines workflow we’ve defined includes two Jobs representing different steps you might find in a typical “build and deploy” pipeline. Each Job defines the steps it will run and any dependencies needed to run it, but the containerized runtime environments you might expect from other kinds of workflows are still missing.
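To make the shape of these two Jobs concrete, here is a plain-Python model of a build-and-deploy pipeline. It is a sketch under stated assumptions: the Job class, the describe helper, the Job name example-pipeline-build, and every step and dependency listed are hypothetical, and the field names mirror this document's prose (steps, dependencies) rather than the exact ops.yml keys.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """One Pipelines Job: the shell steps it runs and the dependencies it needs.

    Field names follow the prose; the real ops.yml keys may differ.
    """
    name: str
    steps: list[str]
    dependencies: list[str] = field(default_factory=list)


def describe(jobs: list[Job]) -> str:
    """Summarize each Job, numbered in the order the Jobs run."""
    lines = []
    for position, job in enumerate(jobs, start=1):
        deps = ", ".join(job.dependencies) or "none"
        lines.append(f"{position}. {job.name}: {len(job.steps)} step(s); dependencies: {deps}")
    return "\n".join(lines)


# Hypothetical build-and-deploy pipeline; every name and command here is illustrative.
pipeline = [
    Job("example-pipeline-build", ["npm ci", "npm test", "npm run build"], ["nodejs", "npm"]),
    Job("example-pipeline-deploy", ["./deploy.sh"], ["git"]),
]

if __name__ == "__main__":
    print(describe(pipeline))
```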

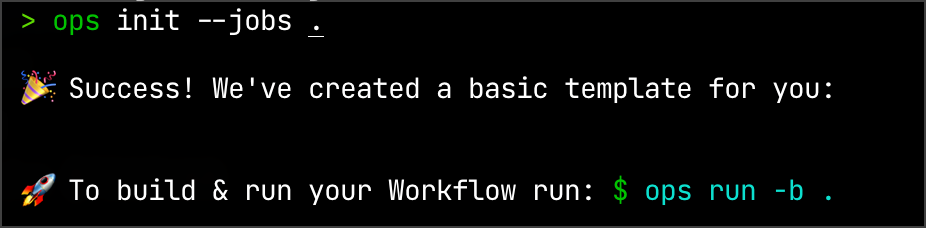

Before we can work with our Jobs, we need to initialize them. This is also done with the ops init command, using the -j (or --jobs) flag from within the Pipeline’s directory:

After you run the ops init command specified above, you should receive a response confirming that a basic template has been created for you:

Inside the newly created .ops/ directory in your Pipeline’s directory, you’ll find a file structure that should look familiar if you have worked with our Commands and Services workflows (the output of tree .ops is below):

The .ops/ directory contains a jobs/ directory with a folder for each of the Jobs defined as part of your Pipelines workflow. Within each of those subdirectories, you’ll find a Dockerfile, main.sh, ops.yml, and the other files that typically comprise a workflow.
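After running ops init with -j, a quick check can confirm that every Job directory received the files listed above. This sketch assumes the layout described here (.ops/jobs/<job-name>/ containing Dockerfile, main.sh, and ops.yml); missing_scaffold_files is a hypothetical helper, and it ignores the other workflow files a Job directory may contain.

```python
from pathlib import Path

# Files this document says each Job directory contains; other workflow files may also be present.
EXPECTED_FILES = ("Dockerfile", "main.sh", "ops.yml")


def missing_scaffold_files(ops_dir: Path) -> dict[str, list[str]]:
    """Return {job_name: [missing files]} for each Job directory under <ops_dir>/jobs/."""
    jobs_dir = ops_dir / "jobs"
    if not jobs_dir.is_dir():
        raise FileNotFoundError(f"{jobs_dir} not found; run ops init . -j first")
    missing: dict[str, list[str]] = {}
    for job_dir in sorted(p for p in jobs_dir.iterdir() if p.is_dir()):
        absent = [f for f in EXPECTED_FILES if not (job_dir / f).is_file()]
        if absent:
            missing[job_dir.name] = absent
    return missing


if __name__ == "__main__":
    ops_dir = Path(".ops")
    if (ops_dir / "jobs").is_dir():
        print(missing_scaffold_files(ops_dir) or "every Job directory has the expected files")
    else:
        print("no .ops/jobs/ directory here; run ops init . -j first")
```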

Structure of Pipelines Jobs

Looking at these files, you can see how each Job definition in your original ops.yml file translates into that Job’s execution environment. For reference, here is the definition of the Job we’ll examine (example-pipeline-deploy), excerpted from the original ops.yml file:

Let’s break down how a Job from the original ops.yml file was translated into a containerized environment for running your custom code.

ops.yml Template

The Job’s definition under jobs in our workflow’s ops.yml file has been translated into a new workflow definition at .ops/jobs/example-pipeline-deploy/ops.yml. This file defines a Commands workflow that runs the steps we specified for this Job.

Dockerfile and Dependencies

The Dockerfile defines the execution environment of the Job, and it runs the dependencies.sh script containing the dependencies we defined in our original ops.yml file.

main.sh Template

Each string in a Job’s steps array becomes its own line in the main.sh script.
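The step-to-line translation can be modeled in a few lines. This is an illustrative sketch rather than the CLI’s implementation: render_main_sh is a hypothetical helper, and the #!/bin/bash shebang is an assumption, so compare the result with the main.sh the CLI generated for your Job.

```python
def render_main_sh(steps: list[str], shebang: str = "#!/bin/bash") -> str:
    """Return a main.sh body with one line per step, in the order the steps are listed.

    The default shebang is an assumption, not taken from the CLI's template.
    """
    return "\n".join([shebang, *steps]) + "\n"


if __name__ == "__main__":
    # Hypothetical steps for a deploy Job.
    print(render_main_sh(["echo 'deploying'", "./deploy.sh"]), end="")
```

In this model, reordering the steps array reorders the lines of main.sh, which is why the order of steps in ops.yml matters.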

All Files

The files from the example-pipeline-deploy job are included in the following tabbed code box: